Summary

In this talk, Dr. Deng summarized her research in sentiment analysis, which aims to understand the opinions expressed in text. As she discussed, sentiment is generally divided into three categories (positive, negative and neutral), of which positive and negative are the main concern. Much work in sentiment analysis and opinion mining has been done at the general document level as well as at fine-grained levels such as the sentence, phrase and aspect levels. Deng and her colleagues contribute at the entity/event level.

Dr. Deng pointed out that some implicit sentiment cannot be recognized by relying on sentiment lexicons alone. Consider the following sentence:

Sentence: It is great that the bill was defeated.

Here, a positive sentiment is expressed explicitly via the word “great”, and the target of this sentiment is the fact that “the bill was defeated”. It is not difficult to detect that the writer or speaker has a positive attitude towards that fact. It is harder, however, to identify the person’s attitude towards the entity “bill” itself. Dr. Deng stated that, unlike detecting explicit sentiment, recognizing implicit sentiment requires inference.
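The kind of inference described above can be illustrated with a minimal Python sketch. It is not Dr. Deng's rule set (her actual rules are in Table 1). It assumes a single simplified rule: a sentiment towards an event that is bad for its theme implies the opposite sentiment towards the theme, while a sentiment towards an event that is good for its theme carries over unchanged. The two toy lexicons and the function names are hypothetical and exist only for this example.

```python
# Toy sentiment lexicon (hypothetical entries, for illustration only).
SENTIMENT_LEXICON = {"great": "positive", "terrible": "negative"}

# Toy GoodFor/BadFor labels: how an event affects its theme (assumed labels).
EVENT_EFFECT = {"defeated": "badFor", "passed": "goodFor"}


def flip(polarity):
    """Return the opposite polarity."""
    return "negative" if polarity == "positive" else "positive"


def infer_theme_sentiment(sentiment_word, event_word):
    """Infer the writer's implicit attitude towards the theme of an event.

    Assumed simplified rule (not the speaker's published rules):
      - sentiment towards a goodFor event carries over to the theme;
      - sentiment towards a badFor event is reversed for the theme.
    """
    explicit = SENTIMENT_LEXICON[sentiment_word]  # explicit sentiment towards the event
    effect = EVENT_EFFECT[event_word]             # effect of the event on its theme
    return explicit if effect == "goodFor" else flip(explicit)


# "It is great that the bill was defeated."
print(infer_theme_sentiment("great", "defeated"))
```

Under these assumptions, the explicit positive sentiment towards the defeat yields an inferred negative attitude towards the bill, which a lexicon lookup on its own would not find.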

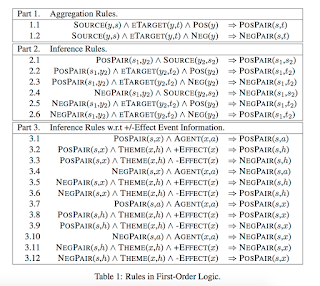

To achieve this inference goal, she and her colleagues developed inference rules (Table 1) and conducted extensive experiments to demonstrate the inference ability of these rules. They also developed computational models that aim to infer sentiments automatically. These models take into account information not only from sentiment analysis but also from other Natural Language Processing tasks. Results show that their framework outperforms the baselines on both tasks: by more than 10 points in F-measure on sentiment detection and by more than 7 points in accuracy on GoodFor/BadFor (gfbf) polarity disambiguation.

Details about the talk:

Title: Entity/Event-level Sentiment Detection and Inference

Speaker: Lingjia Deng